Warrantless wiretapping is back in the news, thanks largely to Michael Isikoff's cover piece in the December 22 issue of Newsweek. We now know that the principal source for James Risen and Eric Lichtblau's Pulitzer Prize-winning article that broke the story three years ago in the New York Times was a Justice Department official named Thomas M. Tamm.

Most of the current attention, naturally, has focused on Tamm and on whether, as Newsweek's tagline put it, he's "a hero or a criminal". Having never in my life faced an ethical dilemma on the magnitude of Tamm's -- weighing betrayal of one trust against the service of another -- I can't help but wonder what I'd have done in his shoes.

Whistleblowing is inherently difficult, morally ambiguous territory. At best there are murky shades of gray, inevitably viewed through the myopic lenses of individual loyalties, fears, and ambitions, to say nothing of the prospect of life-altering consequences that might accompany exposure.

Coupled with the high stakes of national security and civil liberties, it's hard not to think about Tamm in the context of another famously anonymous source, the late Mark Felt (known to a generation only as Watergate's "Deep Throat").

But an even more interesting revelation -- one ultimately far more troubling -- can be found in a regrettably less prominent sidebar to the main Newsweek story, entitled "Now we know what the battle was about", by Daniel Klaidman. Put together with other reports about the program, it lends considerable credence to claims that telephone companies (including my alma mater AT&T) provided the NSA with wholesale access to purely domestic calling records, on a scale beyond what has been previously acknowledged.

The sidebar casts new light on one of the more dramatic episodes to leak out of Washington in recent memory; quoting Newsweek:

It is one of the darkly iconic scenes of the Bush Administration. In March 2004, two of the president's most senior advisers rushed to a Washington hospital room where they confronted a bedridden John Ashcroft. White House chief of staff Andy Card and counsel Alberto Gonzales pressured the attorney general to renew a massive domestic-spying program that would lapse in a matter of days. But others hurried to the hospital room, too. Ashcroft's deputy, James Comey, later joined by FBI Director Robert Mueller, stood over Ashcroft's bed to make sure the White House aides didn't coax their drugged and bleary colleague into signing something unwittingly. The attorney general, sick and pain-racked from a rare pancreatic disease, rose up from his bed, gathering what little strength he had, and firmly told the president's emissaries that he would not sign their papers.

White House hard-liners would make one more effort -- getting the president to recertify the program on his own, relying on his powers as commander in chief. But in the end, with an election looming and the entire political leadership of the Justice Department poised to resign rather than carry out orders they thought to be illegal, Bush backed down. The rebels prevailed.

Like most people, I had assumed that the incident concerned the NSA's interception (without the benefit of court warrants) of the contents of telephone and Internet traffic between the US and foreign targets. That program is at best a legal gray area, the subject of several lawsuits, and the impetus behind Congress' recent (and I think quite ill-advised) retroactive grant of immunity to telephone companies that provided the government with access without proper legal authority.

But that, apparently, wasn't what this was about at all. Instead, again quoting Newsweek:

Two knowledgeable sources tell NEWSWEEK that the clash erupted over a part of Bush's espionage program that had nothing to do with the wiretapping of individual suspects. Rather, Comey and others threatened to resign because of the vast and indiscriminate collection of communications data. These sources, who asked not to be named discussing intelligence matters, describe a system in which the National Security Agency, with cooperation from some of the country's largest telecommunications companies, was able to vacuum up the records of calls and e-mails of tens of millions of average Americans between September 2001 and March 2004. The program's classified code name was "Stellar Wind," though when officials needed to refer to it on the phone, they called it "SW." (The NSA says it has "no information or comment"; a Justice Department spokesman also declined to comment.)

While it may seem on the surface to involve little more than arcane and legalistic hairsplitting, that the battle was about records rather than content is actually quite surprising. And it raises new -- and rather disturbing -- questions about the nature of the wiretapping program, and especially about the extent of its reach into the domestic communications of innocent Americans.

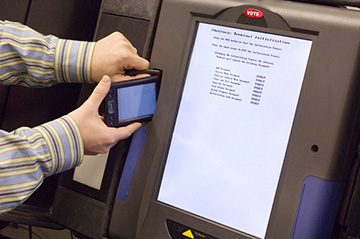

There have been a number of recent reports of touchscreen voting machines "flipping" voters' choices in early voting in the US Presidential election. If true, that's a very serious problem, apparently confirming everyone's worst fears about the reliability and security of the technology. So what should we make of these reports, and what should we do?

In technical terms, many of the problems being reported may be related to mis-calibrated touch input sensors. Touchscreen voting machines have to be adjusted from time to time so that the input sensors on the screen correspond accurately to the places where the candidate choices are displayed. Over time and in different environments, these analog sensors can drift away from their proper settings, and so touchscreen devices generally have a corrective "calibration" maintenance procedure that can be performed as needed. If a touchscreen is not properly accepting votes for a particular candidate, there's a good chance that it needs to be re-calibrated. In most cases, this can be done right at the precinct by the poll workers, and takes only a few minutes. Dan Wallach has an excellent summary (written in 2006) of calibration issues on the ACCURATE web site. The bottom line is that voters should not hesitate to report to poll workers any problems they have with a touchscreen machine -- there's a good chance it can be fixed right then and there.

Unfortunately, the ability to re-calibrate these machines in the field is a double-edged sword from a security point of view. The calibration procedure, if misused, can be manipulated to create exactly the same problems that it is intended to solve. It's therefore extremely important that access to the calibration function be carefully controlled, and that screen calibration be verified as accurate. Otherwise, a machine could be deliberately (and surreptitiously) mis-calibrated to make it difficult or impossible to vote for particular candidates.
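To make the calibration issue concrete, here is a minimal sketch of how a drifted or tampered calibration can turn a press on one candidate into a vote for another. It is a toy model only: the linear `Calibration` map, the `BUTTONS` layout, and the 60-pixel offset are all assumptions invented for illustration, and none of it describes the internals of any actual voting machine.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    """Hypothetical linear map from raw sensor readings to screen pixels."""
    scale_x: float
    offset_x: float
    scale_y: float
    offset_y: float

    def to_screen(self, raw_x, raw_y):
        return (self.scale_x * raw_x + self.offset_x,
                self.scale_y * raw_y + self.offset_y)


# Hypothetical ballot layout: candidate -> (top, bottom) pixel rows of its button.
BUTTONS = {
    "Candidate A": (100, 200),
    "Candidate B": (200, 300),
    "Candidate C": (300, 400),
}


def selected_candidate(cal, raw_x, raw_y):
    """Return the candidate whose button contains the calibrated touch point."""
    _, y = cal.to_screen(raw_x, raw_y)
    for name, (top, bottom) in BUTTONS.items():
        if top <= y < bottom:
            return name
    return None


correct = Calibration(1.0, 0.0, 1.0, 0.0)
# Same machine with a 60-pixel vertical error (drift or deliberate tampering).
shifted = Calibration(1.0, 0.0, 1.0, 60.0)

raw_touch = (320, 170)  # the voter presses the lower part of Candidate A's button
print(selected_candidate(correct, *raw_touch))  # Candidate A
print(selected_candidate(shifted, *raw_touch))  # Candidate B
```

In this model a press near the top of Candidate A's button still registers correctly even with the shifted calibration, which is one way such a fault could look intermittent to voters and poll workers.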

Is this actually happening? There's no way to know for sure at this point, and it's likely that most of the problems that have been reported in the current election have innocent explanations. But at least one widely used touchscreen voting machine, the ES&S iVotronic, has security problems that make partisan re-calibration attacks a plausible potential scenario.

A group of MIT students made news last week with their discovery of insecurities in Boston's "Charlie" transit fare payment system [pdf]. The three students, Zack Anderson, R.J. Ryan and Alessandro Chiesa, were working on an undergraduate research project for Ron Rivest. They had planned to present their findings at the DEFCON conference last weekend, but were prevented from doing so after the transit authority obtained a restraining order against them in federal court.

The court sets a dangerous standard here, with implications well beyond MIT and Boston. It suggests that advances in security research can be suppressed for the convenience of vendors and users of flawed systems. It will, of course, backfire, with the details of the weaknesses (and their exploitation) inevitably leaking into the underground. Worse, the incident sends an insidious message to the research community: warning vendors or users before publishing a security problem is risky and invites a gag order from a court. The ironic -- and terribly unfortunate -- effect will be to discourage precisely the responsible behavior that the court and the MBTA seek to promote. The lesson seems to be that the students would have been better off had they simply gone ahead without warning, effectively blindsiding the very people they were trying to help.

The Electronic Frontier Foundation is representing the students, and as part of their case I (along with a number of other academic researchers) signed a letter [pdf] urging the judge to reverse his order.

Update 8/13/08: Steve Bellovin blogs about the case here.

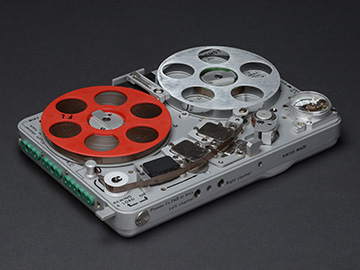

Over-engineered surveillance gadgetry has always held a special (if somewhat perverse, given my professional interests) fascination for me. As a child, I understood that the best job in the world belonged to Harry Caul (and as an adult, it was a thrill to finally meet his real-life counterpart, countermeasures expert Marty Kaiser, last week).

So perhaps it was inevitable when recently, facing a low-grade but severely geeky midlife crisis, I recaptured my youth with the Maserati of 70's spy gear: the Nagra SNST (see photo at right). For decades, this miniature reel-to-reel audio recorder, specially optimized for eavesdropping, was the standard surveillance device, used by just about every law enforcement and intelligence agency that could afford the money-is-no-object price tag. Slightly larger than two iPods, the SNST runs virtually silently for over six hours on two AA batteries, and can record about two hours of voice-grade stereo audio on a 2.75 inch reel of 1/8 inch wide tape. Now largely made obsolete by soulless digital models, the Nagras are built more like Swiss watches than tape recorders. And trust me, now that I own one, I feel twenty years younger.

I bought mine on the surplus market and ended up with a unit from the Missouri State Highway Patrol, where it had been used in drug and other investigations until at least 1996. Why do I know so much about its history?

Because my new surveillance recorder came with a tape.

I had assumed the tape would be blank or erased, but before recording over it a few days ago, I decided to give it a listen just to be sure. Much to my surprise, it wasn't blank at all, but contained a message from the past: "February 8, 1996, I'm Trooper Blunt, Missouri State Highway Patrol..."

The tape, it turns out, was an old evidence recording of a confidential informant being sent out to try to purchase some methamphetamine. But the informant's identity isn't so "confidential" after all: his name, and the name of the guy he was to buy the drugs from, were given right there at the beginning of the tape. The tape they'd eventually sell me a dozen years later.

I made an MP3 of the recording; it's about 42 minutes long and, I must admit, as crime drama goes it's a letdown. It consists almost entirely of the sound of the informant driving to and from the buy location, with no actual transaction captured on tape. No intricate criminal negotiations or high-speed car chases here, I'm afraid. So, although the recording is fairly long, all the actual talking is in the first few minutes, where the officer gives last-minute instructions to the informant. But just in case someone involved still harbors a grudge after 12 years, I've muted out the names of the informant and the suspect from the audio stream. You can listen to the audio here [.mp3 format].

Unfortunately, this isn't the first time that confidential police data has leaked out in this and other ways, and it no doubt won't be the last. Law enforcement agencies routinely do a bad job redacting names and other sensitive information from electronic documents; in May, I discovered deleted figures hidden in the PDF of a Justice Department report on wiretapping. And a few years ago, when my lab was acquiring surplus telephone interception devices for our work on wiretapping countermeasures, some of the equipment we purchased (on eBay) contained old intercept recordings and logs or was configured with suspects' telephone numbers.

None of this should be terribly surprising. It's becoming harder and harder to destroy data, even when it's as carefully controlled as confidential legal evidence. Aside from copies and backups made in the normal course of business, there's the problem of obsolete media in obsolete equipment; there may be no telling what information is on that old PC being sent to the dump, where it might end up, or who might eventually read it. More secure storage practices -- particularly transparent encryption -- can help here, but they won't make the problem go away entirely.

Once sensitive or personal data is captured, it stays around forever, and the longer it does, the more likely it is that it will end up somewhere unexpected. This is one reason why everyone should be concerned about large-scale surveillance by law enforcement and other government agencies; it's simply unrealistic to expect that the personal information collected can remain confidential for very long.

And whatever you do, should you find yourself becoming an informant for the Missouri Highway Patrol, you might want to consider using an alias.

MP3 audio here.

Photo: My new Nagra SNST; hi-res version available on Flickr.

I had a great time yesterday at David Byrne's Playing the Building auditory installation (running through August in the Battery Maritime Building in lower Manhattan). It involves an old organ console placed in the middle of a semi-abandoned ferry terminal with various actuators hooked up throughout the building. The structure itself -- its pipes, columns, and so on -- makes the actual sound, under the control of whoever is at the console. You can read more about the project at davidbyrne.com.

Anyone can just go in and spend a few minutes playing the building. There's no real way to prepare ahead of time or directly apply expertise with another instrument; to make sound you have to experiment. So every performance by a visitor is by necessity an at least somewhat playful exploration. (There are apparently also occasional scheduled performances by musicians who've actually rehearsed with the contraption, but there wasn't one while I was there yesterday).

The result is surprisingly successful at blurring the distinctions between performer and audience, professional and amateur, work and play, signal and noise. An almost incidental side effect is some interesting, and occasionally hauntingly beautiful, ambient music. It reminded me of some of the early field recordings of Tony Schwartz (a terrific body of work I discovered, sadly, through his recent obituary on WNYC's "On the Media").

Given the nature of the piece, it was a bit incongruous to see almost everyone taking pictures of the console and the space, but hardly anyone recording the sound itself, at least while I was there. Presumably this has something to do with the relative ubiquity of small cameras versus small audio recorders, but I suspect there's more to it than that. The commercial and artistic establishment routinely prohibits "amateur" recording in "professional" performance spaces, and we've become conditioned to assume that that's just the natural order of things. (We're also expected to automatically consent to being recorded ourselves while in those same spaces, but maybe that's another story.) Amateur documentary field recording seems in danger of withering away even as the technology to do it becomes cheaper, better, and more available. In fifty years will we be able to find out what daily life in the early part of this century really sounded like?

Anyway, bucking this trend I happened to have a little pocket digital recorder with me and so I made a couple of brief recordings. (Here, I was cheerfully told, recording is perfectly fine.) Every minute or two a different (anonymous) visitor is at the console (there was a steady line). Most people played with a partner; a few soloed.

Each 256 kbps stereo .mp3 file is about 12 minutes long and about 21 MB. I'll post the (huge) uncompressed PCM .wav files to freesound.org shortly.

- Track 1: Left-Right, 3:15pm [.mp3 audio]

Recorded at the center of the main room, facing toward the organ console. There are occasional footsteps, people talking, children running and laughing, etc. (which, I think, are best understood as being part of the "performance"), but the dominant sound here is the building itself being played. This perspective approximates being in the "audience".

- Track 2: Rear-Front, 3:30pm [.mp3 audio]

Recorded near the console, oriented left channel toward the rear of the room and right toward the front. That is, the stereo image is rotated 90 degrees from the above and the mic position is much closer to the person playing. It includes more (and louder) talking and other sound from audience members, and because of the position some of the building sounds that would be quite loud in the center of the room are barely audible here. This perspective approximates what one hears while actually playing the building from the organ console.

(Note that these were not recorded at the same time; I only had the one recorder with me).

Technical note: All sound was recorded on July 5, 2008 with a handheld Nagra ARES-M miniature digital recorder via the "green band" clip-on XY microphone, in 16-bit/48 kHz/1536 kbps stereo PCM mode (converted to MP3 with Logic Pro 8).
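For anyone curious how these numbers relate, the arithmetic can be sketched in a few lines. The function names are mine, sizes are in decimal megabytes, and the 12-minute duration is only the approximate track length mentioned above.

```python
def pcm_bitrate_bps(bit_depth, sample_rate_hz, channels):
    """Bit rate of uncompressed PCM audio, in bits per second."""
    return bit_depth * sample_rate_hz * channels


def size_megabytes(bitrate_bps, seconds):
    """Approximate file size in decimal megabytes, ignoring container overhead."""
    return bitrate_bps * seconds / 8 / 1_000_000


pcm = pcm_bitrate_bps(16, 48_000, 2)
print(pcm)                                # 1536000 bits/s, i.e. 1536 kbps
print(size_megabytes(pcm, 12 * 60))       # about 138 MB of PCM per 12-minute track
print(size_megabytes(256_000, 12 * 60))   # about 23 MB as a 256 kbps MP3
```

The MP3 estimate lands in the same ballpark as the file sizes quoted above, given that the track lengths are only approximate, and the PCM figure shows why the .wav versions count as huge by comparison.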

I was lucky enough to be invited to the first Interdisciplinary Workshop on Security and Human Behavior at MIT this week. Organized by Alessandro Acquisti, Ross Anderson, George Loewenstein, and Bruce Schneier, the workshop brought together an aggressively diverse group of 42 researchers from perspectives across computing, psychology, economics, sociology, philosophy and even photography and skepticism. As someone long interested in security on the human scale [pdf], I found it exciting to meet so many like-minded people from outside my own field. And judging from the comments on Ross' and Bruce's blogs, interest in this subject extends well beyond the attendees.

There wasn't a single climactic insight or big result from the workshop; the participants mainly gave overviews of their fields or talked about their previously published work. The point was to get people with similar interests but widely different backgrounds talking (and hopefully collaborating) with one another, and it succeeded amazingly well at that. I overheard someone (accurately) comment that many of the kinds of conversations that usually take place in the hallway or the bar at most conferences were taking place in the sessions here.

This was a small and informal event, with no published proceedings or other tangible record, but I made quick-and-dirty sound recordings of most of the sessions, which I'll put up here as I process them.

I apologize for the uneven sound quality (the Frank Gehry sculpture in which the workshop was held was clearly not designed with acoustics in mind, and the speakers weren't always standing near my recorder's microphone on the podium). Audience comments in particular may be inaudible. Keep in mind that these are all big 90-minute MP3 files, about 40MB each, so they are definitely not for the bandwidth-deprived. For concise summaries of the sessions, see Ross Anderson's excellent live-blogged notes here.

Update 7/1/08 8pm: I'm heading back home from the conference now, with all the sound from yesterday already online below. I should have today's files (except the last session) up by late tonight or early tomorrow.

Update 7/1/08 11pm: I've uploaded the rest of the conference audio (except for the final session), all of which is linked from the agenda below. Unfortunately, I had to leave just before the last session (Session 8), so there's no audio for that one; sorry.

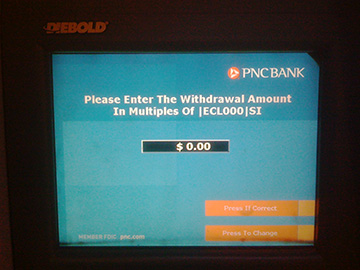

If ordinary bank ATMs can be made secure and reliable, why can't electronic voting machines? It's a simple enough question, but, sadly, the answer isn't so simple. Secure voting is a much more complex technical problem than electronic banking, not least because a democratic election's dual requirements for ballot secrecy and transparent auditability are often in tension with one another in the computerized environment. Making ATMs robust and resistant to thieves is easy by comparison.

But even ATMs aren't immune from obscure and mysterious failures. I was reminded of this earlier today when I tried to make a withdrawal at a PNC Bank cash machine in Philadelphia. When I reached the screen for selecting the amount of cash I wanted, I was prompted to "Please Enter The Withdrawal Amount In Multiples of |ECL000|SI". Normally, the increment is $10 or $20, and |ECL000|SI isn't a currency denomination with which I'm at all familiar. See the photo at right.

Obviously, something was wrong with the machine -- its hardware, its software or its configuration -- and on realizing this I faced a dilemma. What else was wrong with it? Do I forge ahead and ask for my cash, trusting that my account won't be completely emptied in the process? Or do I attempt to cancel the transaction and hope that I get my card back so I could try my luck elsewhere? Complicating matters was the looming 3-day weekend, not to mention the fact that I was about to leave for a trip out of the country. If my card got eaten, I'd end up without any simple way to get cash when I got to my destination. Wisely or not, I decided to hold my breath and continue on, hoping that this was merely an isolated glitch in the user interface, limited to that one field.

Or not. I let out an audible sigh of relief when the machine dispensed my cash and returned my card. But it also gave me (and debited my account) $10 more than I requested. And although I selected "yes" when asked if I wanted a receipt, it didn't print one. So there were at least three things wrong with this ATM (the adjacent machine seemed to be working normally, so it wasn't a systemwide problem). Since there was an open bank branch next door, I decided to report the problem.

The assistant branch manager confidently informed me that the ATMs had been working fine, that there was no physical damage to the machine, and that I must therefore have made a mistake. No, there was no need to investigate further; no one had complained before, and if I hadn't lost any money, what was I worried about? When I tried to show her the screen shot on my phone, she ended the conversation by pointing out that for security reasons, photography is not permitted in the bank (even though the ATM in question wasn't in the branch itself). It was like talking to a polite brick wall.

Such exchanges are maddeningly familiar in the security world, even when the stakes are far higher than they were here. Once invested in a complex technology, there's a natural tendency to defend it even when confronted with persuasive evidence that it isn't working properly. Banking systems can and do fail, but because the failures are relatively rare, we pretend that they never happen at all; see the excellent new edition of Ross Anderson's Security Engineering text for a litany of dismaying examples.

But knowing that doesn't make it any less frustrating when flaws are discovered and then ignored, whether in an ATM or a voting machine. Perhaps the bank manager could join me for a little game of Security Excuse Bingo [link].

Click the photo above for its Flickr page.

N.B.: Yes, the terminal in question was made by Diebold, and yes, their subsidiary, Premier Election Systems, has faced criticism for problems and vulnerabilities in its voting products. But that's not an entirely fair brush with which to paint this problem, since without knowing the details, it could just as easily have been caused entirely by the bank's software or configuration.

Readers of this blog may recall that in the Fall of 2005, my graduate students (Micah Sherr, Eric Cronin, and Sandy Clark) and I discovered that the telephone wiretap technology commonly used by law enforcement agencies can be misled or disabled altogether simply by sending various low-level audio signals on the target's line [link our full pdf paper here]. Fortunately, certain newer tapping systems, based on the 1994 CALEA regulations, have the potential to neutralize these vulnerabilities, depending on how they are configured. Shortly after we informed the FBI about our findings, an FBI spokesperson reassured the New York Times that the problem was now largely fixed and affects less than 10 percent of taps [link].

Newly released data, however, suggest that the FBI's assessment may have been wildly optimistic. According to a March 2008 Department of Justice audit on CALEA implementation [pdf], about 40 percent of telephone switches remained incompatible with CALEA at the end of 2005. But it may be even worse than that; it's possible that many of the other 60 percent are vulnerable, too. According to the DoJ report, the FBI is paying several telephone companies to retrofit their switches with a "dial-out" version of CALEA. But as we discovered when we did our wiretapping research, CALEA dial-out has backward compatibility features that can make it just as vulnerable as the previous systems. These features can sometimes be turned off, but it can be difficult to do so reliably. And there's nothing in the extensive testing section of the audit report to suggest that CALEA collection systems are even tested for this.

By itself, this could serve as an object lesson on the security risks of backward compatibility, a reminder that even relatively simple things like wiretapping systems are difficult to get right without extensive review. The small technical details matter a lot here, which is why we should always scrutinize -- carefully and publicly -- new surveillance proposals to ensure that they work as intended and don't create subtle risks of their own. (That point is a recurring theme here; see this post or this post, for example.)

But that's not the most notable thing about the DoJ audit report.

It turns out that there's sensitive text hidden in the PDF version of the report, which is prominently marked "REDACTED - FOR PUBLIC RELEASE" on each page. It seems that whoever tried to sanitize the public version of the document did so by pasting an opaque PDF layer atop the sensitive data in several of the figures (e.g., on page 9). This is widely known to be a completely ineffective redaction technique, since the extra layer can be removed easily with Adobe's own Acrobat software or by just cutting and pasting text. In this case, I discovered the hidden text by accident, while copying part of the document into an email message to one of my students. (Select the blanked-out subtitle line in this blog entry to see how easy it is.) Ironically, the Justice Department has suffered embarrassment for this exact mistake at least once before: two years ago, they filed a leaky pdf court document that exposed eight pages of confidential material [see link].
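A toy model may help show why an opaque layer hides text from the eye but not from copy-and-paste. This is not a real PDF parser and says nothing about how Acrobat works internally; the `TextObject` and `OpaqueRect` classes, the page contents, and the placeholder strings are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class TextObject:
    text: str
    box: tuple  # (x0, y0, x1, y1)


@dataclass
class OpaqueRect:
    box: tuple  # (x0, y0, x1, y1)


def covers(outer, inner):
    """True if rectangle `outer` completely contains rectangle `inner`."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and outer[2] >= inner[2] and outer[3] >= inner[3])


def visible_text(page):
    """What a viewer shows: text not hidden under a later opaque rectangle."""
    shown = []
    for i, item in enumerate(page):
        if isinstance(item, TextObject):
            hidden = any(isinstance(later, OpaqueRect) and covers(later.box, item.box)
                         for later in page[i + 1:])
            if not hidden:
                shown.append(item.text)
    return shown


def extracted_text(page):
    """What text extraction sees: every text object, overlays notwithstanding."""
    return [item.text for item in page if isinstance(item, TextObject)]


def redact_properly(page):
    """Delete the covered text objects themselves instead of merely hiding them."""
    rects = [item for item in page if isinstance(item, OpaqueRect)]
    return [item for item in page
            if not (isinstance(item, TextObject)
                    and any(covers(r.box, item.box) for r in rects))]


# Page contents in drawing order; the rectangle is painted over the second line.
page = [
    TextObject("Figure title (public)", (0, 0, 200, 20)),
    TextObject("placeholder sensitive value", (0, 30, 200, 50)),
    OpaqueRect((0, 25, 200, 55)),
]

print(visible_text(page))                      # only the public line
print(extracted_text(page))                    # both lines, including the hidden one
print(extracted_text(redact_properly(page)))   # only the public line
```

The point of the sketch is simply that an overlay changes what gets drawn, not what is stored, so a safe redaction has to remove the underlying content before the file is published.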

This time around, the leaked "sensitive" information seems entirely innocuous, and I'm hard pressed to understand the justification for withholding it in the first place (which is why I'm comfortable discussing it here). Some of the censored data concerns the FBI's financial arrangements with Verizon for CALEA retrofits of their wireline network (they paid $2,550 each to upgrade 1,140 older phone switches; now you know).

A bit more interesting was a redacted survey of federal and state law enforcement wiretapping problems. In 2006, more than a third of the agencies surveyed were tapping (or trying to tap) VoIP and broadband services. Also redacted was the fact that law enforcement sees VoIP, broadband, and pre-paid cellular telephones as the three main threats to wiretapping (although the complexities of tapping disposable "burner" cellphones are hardly a secret to fans of TV police procedurals such as The Wire). Significantly, there's no mention of any problems with cryptography, the FBI's dire predictions to the contrary during the 1990s notwithstanding.

But don't take my word for it. A partially de-redacted version of the DoJ audit report can be found here [pdf]. (Or you can do it yourself from the original, archived here [pdf].)

The NSA has a helpful guide to effective document sanitization [pdf]; perhaps someone should send a copy over to the Justice Department. Until then, remind me not to become a confidential informant, lest my name show up in some badly redacted court filing.

Addendum, 16 May 2008, 9pm: Ryan Singel has a nice summary on Wired's Threat Level blog, although for some reason he accused me (now fixed) of being a professor at Princeton. (I'm actually at the University of Pennsylvania, although I suppose I could move to Princeton if I ever have to enter witness protection due to a redaction error).

Addendum, 16 May 2008, 11pm: The entire Office of the Inspector General's section of the DoJ's web site (where the report had been hosted) seems to have vanished this evening, with all of the pages returning 404 errors, presumably while someone checks for other improperly sanitized documents.

Addendum, 18 May 2008, 2am: The OIG web site is now back on the air, with a new PDF of the audit report. The removable opaque layers are still there, but the entries in the redacted tables have been replaced by the letter "x". So this barn door seems now to be closed.

Photo above: A law enforcement "loop extender" phone tap, which is vulnerable to simple countermeasures by the surveillance target. This one was made by Recall Technologies, photo by me.

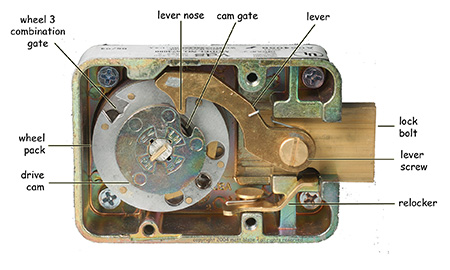

When I published Safecracking for the Computer Scientist [pdf] a few years ago, I worried that I might be alone in harboring a serious interest in the cryptologic aspects of physical security. Yesterday I was delighted to discover that I had been wrong. It turns out that more than ten years before I wrote up my safecracking survey, a detailed analysis of the keyspaces of mechanical safe locks had already been written, suggesting a simple and practical dictionary attack of which I was completely unaware. But I have an excuse for my ignorance: the study was published in secret, in Cryptologic Quarterly, a classified technical journal of the US National Security Agency.

This month the NSA quietly declassified a number of internal technical and historical documents from the 1980's and 1990's (this latest batch includes more recent material than the previously-released 1970's papers I mentioned in last weekend's post here). Among the newly-released papers was Telephone Codes and Safe Combinations: A Deadly Duo [pdf] from Spring 1993, with the author names and more than half of the content still classified and redacted.

Reading between the redacted sections, it's not too hard to piece together the main ideas of the paper. First, they observe, as I had, that while a typical three wheel safe lock appears to allow 1,000,000 different combinations, mechanical imperfections coupled with narrow rules for selecting "good" combinations make the effective usable keyspace smaller than this by more than an order of magnitude. They put the number at 38,720 for the locks used by the government (this is within the range of 22,330 to 111,139 that I estimated in my Safecracking paper).

38,720 is a lot less than 1,000,000, but it probably still leaves safes outside the reach of manual exhaustive search. However, as the anonymous* [see note below] NSA authors point out, an attacker may be able to do considerably better than this by exploiting the mnemonic devices users sometimes employ when selecting combinations. Random numeric combinations are hard to remember. So user-selected combinations are often less than completely random. In particular (or at least I infer from the title and some speculation about the redacted sections), many safe users take advantage of the standard telephone keypad encoding (e.g., A, B, and C encode to "2", and so on) to derive their numeric combinations from (more easily remembered) six letter words. The word "SECRET" would thus correspond to a combination of 73-27-38.
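To make the encoding concrete, here is a minimal Python sketch of the keypad mapping. It assumes the modern keypad layout (with Q on 7 and Z on 9); the function name `word_to_combination` is mine, not anything from the NSA paper.

```python
# Letter groups on a standard telephone keypad (modern layout, which
# includes Q and Z; older keypads omitted them).
KEYPAD = {
    "2": "ABC", "3": "DEF", "4": "GHI", "5": "JKL",
    "6": "MNO", "7": "PQRS", "8": "TUV", "9": "WXYZ",
}
LETTER_TO_DIGIT = {
    letter: digit for digit, letters in KEYPAD.items() for letter in letters
}


def word_to_combination(word):
    """Map a six-letter word to a three-number combination like '73-27-38'."""
    word = word.upper()
    if len(word) != 6 or not all(ch in LETTER_TO_DIGIT for ch in word):
        raise ValueError("expected a six-letter word using A-Z only")
    digits = "".join(LETTER_TO_DIGIT[ch] for ch in word)
    # Split the six digits into three two-digit dial numbers.
    return "-".join(digits[i:i + 2] for i in range(0, 6, 2))


print(word_to_combination("SECRET"))  # 73-27-38
```

Note that because no letter maps to 0 or 1, every number produced this way falls between 22 and 99, which already shrinks the space before any dictionary is applied.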

This "clever" key selection scheme, of course, greatly aids the attacker, reducing the keyspace of probable combinations (after "bad" combinations are removed) to only a few hundred, even when a large dictionary is used. The paper further pruned the keyspaces for targets of varying vocabularies, proposing a "stooge" list of 176 common words, a "dimwit" list of 166 slightly less common words, and a "savant" list of 244 more esoteric words. It would take less than two hours to try all of these combinations by hand, and less than two minutes with the aid of a mechanical autodialer; on average, we'd expect to succeed in half that time.

Dictionary attacks aren't new, of course, but I've never heard of them being applied to safes before (outside of the folk wisdom to "try birthdays", etc). Certainly the net result is impressive -- the technique is much more efficient than exhaustive search, and it works even against "manipulation resistant" locks. Particularly notable is how two only moderately misguided ideas -- the use of words as key material plus overly restrictive (and yet widely followed) rules for selecting "good" combinations -- combine to create a single terrible idea that cedes enormous advantage to the attacker.

Although the paper is heavily censored, I was able to reconstruct most of the missing details without much difficulty. In particular, I spent an hour with an online dictionary and some simple scripts to create a list of 1757 likely six-digit word-based combinations, which is available at www.mattblaze.org/papers/cc-5.txt . (Addendum: A shorter list of 518 combinations based on the more restrictive combination selection guidelines apparently used by NSA can be found at www.mattblaze.org/papers/cc-15.txt ). If you have a safe, you might want to check to see if your combination is listed.
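For the curious, the general shape of such a script might look like the Python sketch below. This is not the script I used to produce the lists above; the word list is a stand-in, and `min_gap` is a hypothetical simplification of the "good combination" rules (it only requires that every pair of numbers be some minimum number of graduations apart on a 100-graduation dial).

```python
# Keypad encoding as a translation table (modern layout with Q and Z).
KEYPAD = str.maketrans(
    "ABCDEFGHIJKLMNOPQRSTUVWXYZ",
    "22233344455566677778889999",
)


def dial_gap(a, b):
    """Shortest distance between two numbers on a 100-graduation dial."""
    d = abs(a - b) % 100
    return min(d, 100 - d)


def candidate_combinations(words, min_gap=5):
    """Return sorted, de-duplicated combinations derived from six-letter words.

    min_gap is a hypothetical stand-in for the real selection rules:
    every pair of numbers must be at least min_gap graduations apart.
    """
    found = set()
    for word in words:
        word = word.strip().upper()
        if len(word) != 6 or not word.isascii() or not word.isalpha():
            continue  # only six-letter A-Z words yield a six-digit combination
        digits = word.translate(KEYPAD)
        nums = tuple(int(digits[i:i + 2]) for i in (0, 2, 4))
        pairs = ((nums[0], nums[1]), (nums[1], nums[2]), (nums[0], nums[2]))
        if all(dial_gap(a, b) >= min_gap for a, b in pairs):
            found.add(nums)  # different words can collide on the same numbers
    return sorted(found)


words = ["secret", "jabber", "fracas", "banana", "cat"]
print(candidate_combinations(words, min_gap=5))
# [(37, 22, 27), (52, 22, 37), (73, 27, 38)]
print(candidate_combinations(words, min_gap=15))
# [(52, 22, 37)]
```

Tightening the spacing rule visibly shrinks the list, which is the same effect that separates the longer and shorter lists linked above.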

As gratifying as it was to discover kindred spirits in the classified world, I found the recently released papers especially interesting for what they reveal about the NSA's research culture. The papers reflect curiosity and intellect not just within the relatively narrow crafts of cryptology and SIGINT, but across the broader context in which they operate. There's a wry humor throughout many of the documents, much like what I remember pervading the old Bell Labs (the authors of the safe paper, for example, propose that they be given a cash award for efficiency gains arising from their discovery of an optimal safe combination, 52-22-37, which they suggest everyone in the government adopt).

It must be a fun place to work.

Addendum (7-May-2008): Several people asked what makes the combinations proposed in the appendix of the NSA paper "optimal". The paper identifies 46-16-31 as the legal combination with the shortest total dialing distance, requiring moving the dial a total of 376 graduations. (Their calculation starts after the entry of the first number and includes returning the dial to zero for opening.) However, this appears to assume an unusual requirement that individual numbers be at least fifteen graduations apart from one another, which is more restrictive than the five (or sometimes ten) graduation minimum recommended by lock vendors. Apparently the official (but redacted) NSA rules for safe combinations are more restrictive than mechanically necessary. If so, the NSA has been using safe combinations selected from a much smaller than needed keyspace. In any case, under the NSA rule the fastest legal word-based combination indeed seems to be 52-22-37, which is derived from the word "JABBER", although the word itself was, inexplicably, redacted from the released paper. However, I found an even faster word-based combination that's legal under more conventional rules: 37-22-27, derived from the word "FRACAS". It requires a total dial movement of only 347 graduations.
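The dialing-distance figures can be reproduced with a few lines of Python. The sketch below assumes the conventional three-wheel dialing sequence and a dial on which the numbers under the index increase when turning left; that model is my assumption rather than something spelled out in the unredacted text, but it does yield the 376 and 347 totals quoted above.

```python
def dial_distance(first, second, third):
    """Graduations travelled after the first number is set, ending at zero.

    Assumed procedure on a 100-graduation dial (numbers under the index
    increase when turning left and decrease when turning right):
      right: stop when `second` reaches the index the third time
      left:  stop when `third` reaches the index the second time
      right: return to zero to open
    """
    to_second = 200 + (first - second) % 100  # two full turns plus the offset
    to_third = 100 + (third - second) % 100   # one full turn plus the offset
    to_zero = third % 100                     # turn right back down to zero
    return to_second + to_third + to_zero


print(dial_distance(46, 16, 31))  # 376
print(dial_distance(37, 22, 27))  # 347
```

Under the same assumed model, 52-22-37 comes out at 382 graduations, a little longer than 46-16-31, as you'd expect for a combination constrained to spell a word.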

* Well, somewhat anonymous. Although the authors' names are redacted in the released document, the paper's position in a previously released and partially redacted alphabetical index [pdf] suggests that one of their names lies between Campaigne and Farley and the other's between Vanderpool and Wiley.

Computer security depends ultimately on the security of the computer -- it's an indisputable tautology so self-evident that it seems almost insulting to point it out. Yet what may be obvious in the abstract is sometimes dangerously under-appreciated in practice. Security people come predominantly from software-centric backgrounds and we're often predisposed to relentlessly scrutinize the things we understand best while quietly assuming away everything else. But attackers, sadly, are under no obligation to play to our analytical preferences. Several recent research results make an eloquent and persuasive case that a much broader view of security is needed. A bit of simple hardware trickery, we're now reminded, can subvert a system right out from under even the most carefully vetted and protected software.

Earlier this year, Princeton graduate student Alex Halderman and seven of his colleagues discovered practical techniques for extracting the contents of DRAM memory, including cryptographic keys, after a computer has been turned off [link]. This means, among other worries, that if someone -- be it a casual thief or a foreign intelligence agent -- snatches your laptop, the fact that it had been "safely" powered down may be insufficient to protect your passwords and disk encryption keys. And the techniques are simple and non-destructive, involving little more than access to the memory chips and some canned-air coolant.

If that's not enough, this month at the USENIX workshop on Large-Scale Exploits and Emergent Threats, Samuel T. King and five colleagues at the University of Illinois Urbana-Champaign described a remarkably efficient approach to adding hardware backdoors to general-purpose computers [pdf]. Slipping a small amount of malicious circuitry (just a few thousand extra gates) into a CPU's design is sufficient to enable a wide range of remote attacks that can't be detected or prevented by conventional software security approaches. To carry out such attacks requires the ability to subvert computers wholesale as they are built or assembled. "Supply chain" threats thus operate on a different scale than we're used to thinking about -- more in the realm of governments and organized crime than lone malfeasants -- but the potential effects can be absolutely devastating under the right conditions.

And don't forget about your keyboard. At USENIX Security 2006, Gaurav Shah, Andres Molina and I introduced JitterBug attacks [pdf], in which a subverted keyboard can leak captured passwords and other secrets over the network through a completely uncompromised host computer, via a simple covert timing channel. The usual countermeasures against keyboard sniffers -- virus scanners, network encryption, and so on -- don't help here. JitterBug attacks are potentially viable not only at the retail level (via a surreptitious "bump" in the keyboard cable), but also wholesale (by subverting the supply chain or through viruses that modify keyboard firmware).
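To give a rough feel for how a timing channel of this kind can carry data, here is a simplified Python sketch. It illustrates the general idea only: a device adds a small delay to each keystroke so that the interval between keystrokes, taken modulo a fixed window, signals a 0 or a 1. The 20 ms window and the function names are hypothetical, and a real channel would also need framing and error handling.

```python
WINDOW_MS = 20  # hypothetical timing window, in milliseconds


def added_delay(interval_ms, bit, window_ms=WINDOW_MS):
    """Extra delay that puts (interval + delay) mod window at 0 for a 0 bit,
    or at window/2 for a 1 bit."""
    target = 0 if bit == 0 else window_ms // 2
    return (target - interval_ms) % window_ms


def decode_bit(observed_ms, window_ms=WINDOW_MS):
    """Recover a bit from an observed inter-keystroke interval."""
    phase = observed_ms % window_ms
    # Near the middle of the window means 1; near either edge means 0.
    return 1 if window_ms / 4 <= phase < 3 * window_ms / 4 else 0


natural_intervals = [137, 92, 210, 181]  # made-up typing gaps, in ms
secret_bits = [1, 0, 1, 1]

sent = [t + added_delay(t, b) for t, b in zip(natural_intervals, secret_bits)]
received = [decode_bit(t) for t in sent]
print(received == secret_bits)  # True
```

Because each bit sits a quarter-window away from the decision boundary, the receiver can tolerate a few milliseconds of network jitter per keystroke in this toy model, and the added delay is never more than one window, which is too small for a typist to notice.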

Should you actually be worried? These attacks probably don't threaten all, or even most, users, but the risk that they might be carried out against any particular target isn't really the main concern here. The larger issue is that they succeed by undermining our assumptions about what we can trust. They work because our attention is focused elsewhere.

An encouraging (if rare) sign of progress in Internet security over the last decade has been the collective realization that even when we can't figure out how to exploit a vulnerability, someone else eventually might. Thankfully, it's now become (mostly) accepted practice to fix newly discovered software weaknesses even if we haven't yet worked out all the details of how to use them to conduct attacks. We know from hard experience that when "theoretical" vulnerabilities are left open long enough, the bad guys eventually figure out how to make them all too practical.

But there's a different attitude, for whatever reason, toward the underlying hardware. Even the most paranoid among us often take the integrity of computer hardware as an article of faith, something not to be seriously questioned. A common response to hardware security research is bemused puzzlement at how we overpaid academics waste our time on such impractical nonsense when there are so many real problems we could be solving instead. (Search the comments on Slashdot and other message boards about the papers cited above for examples of this reaction.)

Part of the disconnect may be because hardware threats are so unpleasant to think about from the software perspective; there doesn't seem to be much we can do about them except to hope that they won't affect us. Until, of course, they eventually do.

Reluctance to acknowledge new kinds of threats is nothing new, and it isn't limited to casual users. Even the military is susceptible. For example, Tempest attacks against cryptographic processors, in which sensitive data radiates out via spurious RF and other emanations, were first discovered by Bell Labs engineers in 1943. They noticed signals that correlated with the plain text of encrypted teletype messages coming from a certain piece of crypto hardware. According to a recently declassified NSA paper on Tempest [pdf]:

Bell Telephone faced a dilemma. They had sold the equipment to the military with the assurance that it was secure, but it wasn't. The only thing they could do was to tell the Signal Corps about it, which they did. There they met the charter members of a club of skeptics who could not believe that these tiny pips could really be exploited under practical field conditions. They are alleged to have said something like: "Don't you realize there's a war on? We can't bring our cryptographic operations to a screeching halt based on a dubious and esoteric laboratory phenomenon. If this is really dangerous, prove it."

Some things never change. Fortunately, the Bell Labs engineers quickly set up a persuasive demonstration, and so the military was ultimately convinced to develop countermeasures. That time, they were able to work out the details before the enemy did.

Thanks to Steve Bellovin for pointing me to www.nsa.gov/public/crypt_spectrum.cfm, a small trove of declassified NSA technical papers from the 1970's and 1980's, all of which are well worth reading. And no discussion of underlying security would be complete without a reference to Ken Thompson's famous Turing award lecture, Reflections on Trusting Trust.